2025


Unifying machine learning and interpolation theory via interpolating neural networks

Nature Communications, 16, 8753 2025

Computational science and engineering are shifting toward data-centric, optimization-based, and self-correcting solvers with artificial intelligence. This transition faces challenges such as low accuracy with sparse data, poor scalability, and high computational cost in complex system design. This work introduces the Interpolating Neural Network (INN), a network architecture that blends interpolation theory and tensor decomposition. INN significantly reduces computational effort and memory requirements while maintaining high accuracy, which allows it to outperform traditional partial differential equation (PDE) solvers, machine learning (ML) models, and physics-informed neural networks (PINNs). It also handles sparse data efficiently and enables dynamic updates of nonlinear activation functions. Demonstrated in metal additive manufacturing, INN rapidly constructs an accurate surrogate model of a Laser Powder Bed Fusion (L-PBF) heat transfer simulation. It achieves sub-10-micrometer resolution for a 10 mm path in under 15 minutes on a single GPU, 5–8 orders of magnitude faster than competing ML models. This offers a new perspective for addressing challenges in computational science and engineering.

Smooth and sparse latent dynamics in operator learning with jerk regularization

NeurIPS 2025 Workshop ML4PS 2025

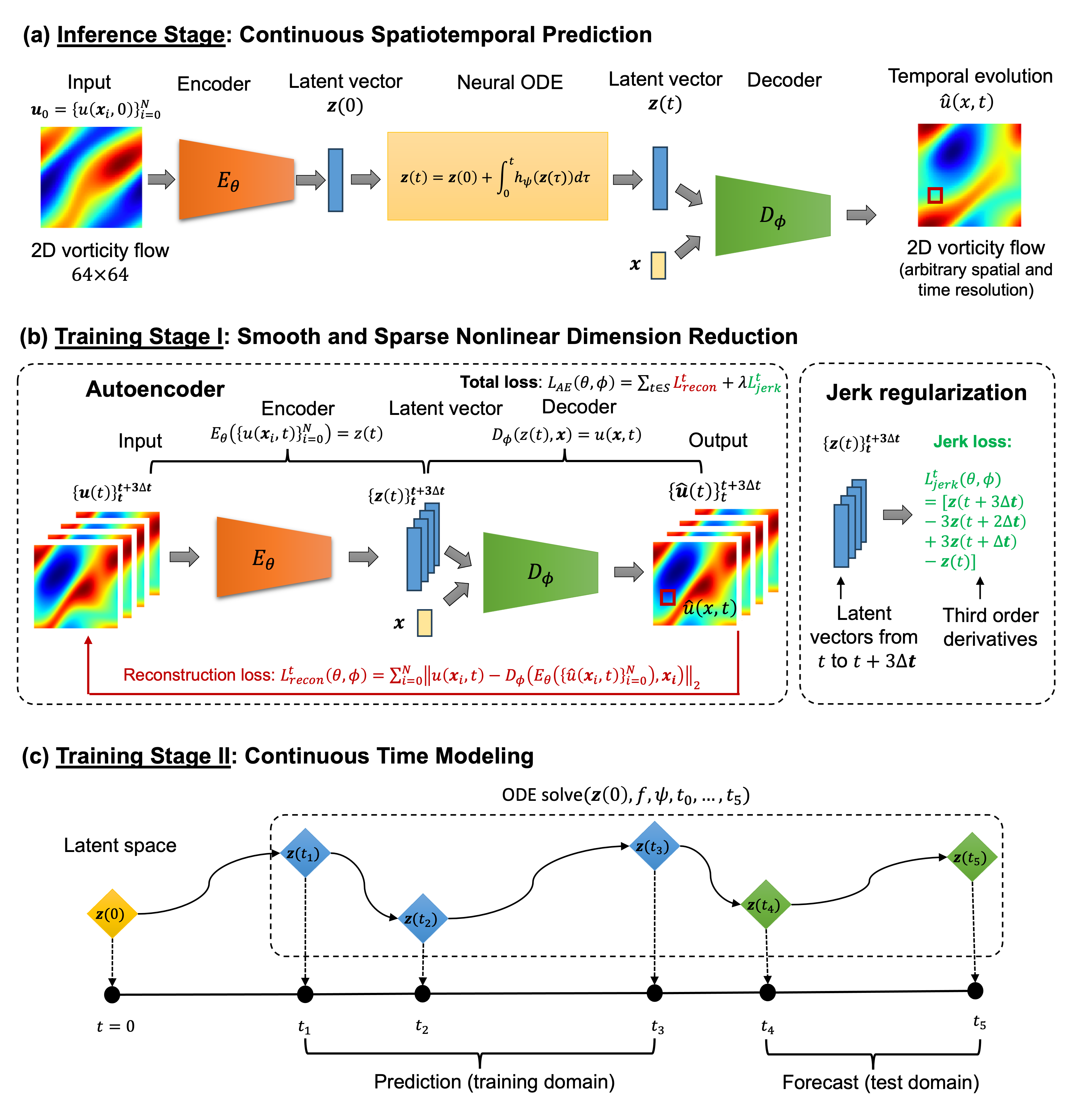

Spatiotemporal modeling is critical for understanding complex systems across various scientific and engineering disciplines, but governing equations are often not fully known or computationally intractable due to inherent system complexity. Data-driven reduced-order models (ROMs) offer a promising approach for fast and accurate spatiotemporal forecasting by computing solutions in a compressed latent space. However, these models often neglect temporal correlations between consecutive snapshots when constructing the latent space, leading to suboptimal compression, jagged latent trajectories, and limited extrapolation ability over time. To address these issues, this paper introduces a continuous operator learning framework that incorporates jerk regularization into the learning of the compressed latent space. This jerk regularization promotes smoothness and sparsity of latent space dynamics, which not only yields enhanced accuracy and convergence speed but also helps identify intrinsic latent space coordinates. Consisting of an implicit neural representation (INR)-based autoencoder and a neural ODE latent dynamics model, the framework allows for inference at any desired spatial or temporal resolution. The effectiveness of this framework is demonstrated through a two-dimensional unsteady flow problem governed by the Navier-Stokes equations, highlighting its potential to expedite high-fidelity simulations in various scientific and engineering applications.
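As a rough illustration of what a jerk penalty measures (not the paper's implementation), jerk is the third time derivative of the latent trajectory, which can be approximated by a third-order finite difference of latent states sampled at a uniform step. The function name `jerk_penalty`, the uniform time step, and the mean-squared form of the penalty are assumptions made for this sketch.

```python
import numpy as np

def jerk_penalty(z: np.ndarray, dt: float) -> float:
    """Mean squared jerk of a sampled latent trajectory (illustrative sketch).

    z  : array of shape (T, d), latent states at T uniformly spaced times (T >= 4)
    dt : time step between consecutive snapshots (assumed uniform)
    """
    # Third-order forward difference divided by dt^3 approximates d^3 z / dt^3
    jerk = np.diff(z, n=3, axis=0) / dt**3
    # Average the squared jerk over time steps and latent dimensions
    return float(np.mean(jerk**2))

t = np.linspace(0.0, 1.0, 11)
dt = t[1] - t[0]
quadratic = np.stack([t**2, 3.0 * t], axis=1)          # zero jerk in both coordinates
cubic = np.stack([t**3, np.zeros_like(t)], axis=1)     # constant jerk of 6 in the first coordinate

print(jerk_penalty(quadratic, dt))
print(jerk_penalty(cubic, dt))
```

A quadratic or linear trajectory gives a penalty near zero, while the cubic trajectory has constant jerk 6 in one of two coordinates, so its mean squared jerk is about 36 / 2 = 18. Adding such a term to a training loss penalizes abrupt changes in latent acceleration, which is the smoothing effect the abstract describes.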

Interpolating Neural Network–Tensor Decomposition (INN-TD): a scalable and interpretable approach for large-scale physics-based problems

International Conference on Machine Learning (ICML) 2025

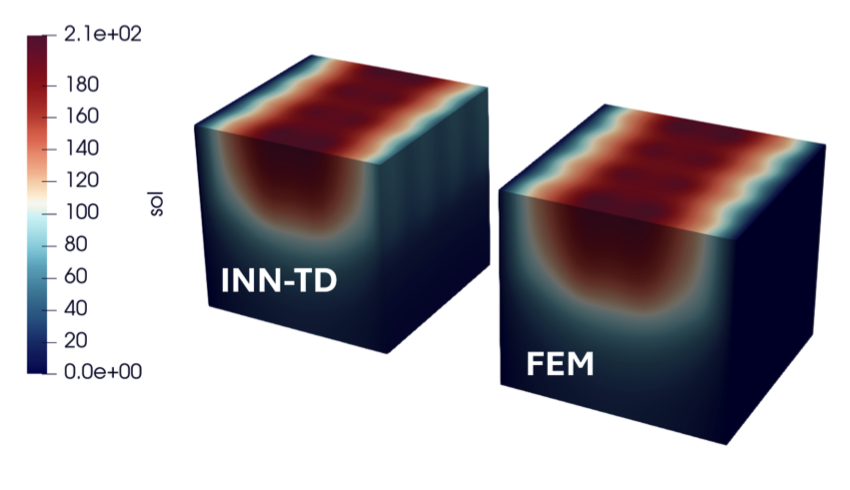

Deep learning has been extensively employed as a powerful function approximator for modeling physics-based problems described by partial differential equations (PDEs). Despite their popularity, standard deep learning models often demand prohibitively large computational resources and yield limited accuracy when scaling to large-scale, high-dimensional physical problems. Their black-box nature further hinders their application to industrial problems where interpretability and high precision are critical. To overcome these challenges, this paper introduces Interpolating Neural Network-Tensor Decomposition (INN-TD), a scalable and interpretable framework that combines the merits of machine learning and finite element methods for modeling large-scale physical systems. By integrating locally supported interpolation functions from the finite element method into the network architecture, INN-TD achieves a sparse learning structure with enhanced accuracy, faster training and solving, and a reduced memory footprint. This makes it particularly effective for training, solving, and inverse optimization of large-scale, high-dimensional parametric PDEs in physical problems where high precision is required.
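A minimal sketch of the two ingredients named above, under simplifying assumptions: each input dimension gets its own 1D grid with piecewise-linear (hat-function) interpolation, which is locally supported, and a 2D field is represented as a rank-M sum of products of 1D modes (a CP-style tensor decomposition). The grids, nodal values, and the function `separable_interp` are hypothetical and are not the INN-TD code.

```python
import numpy as np

def separable_interp(x, y, x_nodes, y_nodes, X_vals, Y_vals):
    """Evaluate u(x, y) = sum_m f_m(x) * g_m(y) (illustrative sketch).

    Each 1D mode f_m (g_m) is a piecewise-linear interpolant of the nodal
    values X_vals[m] on x_nodes (Y_vals[m] on y_nodes). A hat function is
    nonzero only on the two elements next to its node, so each evaluation
    touches just the two nodes bracketing the query point.
    """
    u = 0.0
    for fx, gy in zip(X_vals, Y_vals):
        u = u + np.interp(x, x_nodes, fx) * np.interp(y, y_nodes, gy)
    return u

# Hypothetical rank-2 example: u(x, y) = x*y + x, i.e. f_1 = x, g_1 = y, f_2 = x, g_2 = 1
x_nodes = np.linspace(0.0, 1.0, 5)
y_nodes = np.linspace(0.0, 2.0, 9)
X_vals = np.stack([x_nodes, x_nodes])
Y_vals = np.stack([y_nodes, np.ones_like(y_nodes)])

print(separable_interp(0.3, 1.7, x_nodes, y_nodes, X_vals, Y_vals))  # close to 0.81
# Storage: 2 * (5 + 9) = 28 nodal values instead of a 5 x 9 = 45-value full grid
```

The memory saving grows quickly with dimension: a rank-M separable form over D inputs with n nodes each stores M·D·n values rather than n^D, which is the kind of scalability argument the abstract makes.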

2024

Convolutional Hierarchical Deep Learning Neural Networks–Tensor Decomposition (C-HiDeNN-TD): a scalable surrogate modeling approach for large-scale physical systems

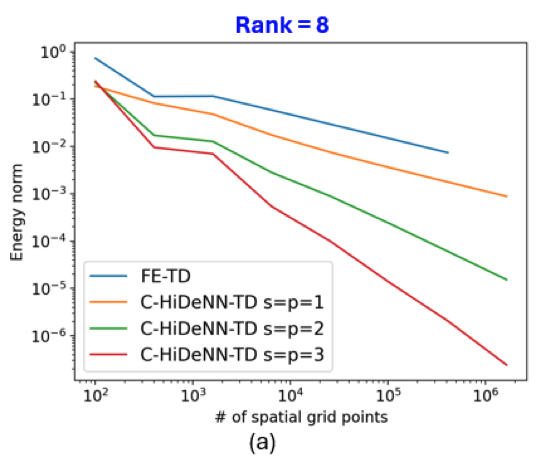

NeurIPS 2024 Workshop D3S3 2024

A common trend in simulation-driven engineering applications is the ever-increasing size and complexity of the problems, for which classical numerical methods typically suffer from long computational times and large memory costs. Methods based on artificial intelligence have been extensively investigated to accelerate partial differential equation (PDE) solvers using data-driven surrogates. However, most data-driven surrogates require an extremely large amount of training data. In this paper, we propose the Convolutional Hierarchical Deep Learning Neural Network-Tensor Decomposition (C-HiDeNN-TD) method, which can directly obtain surrogate models by solving large-scale space-time PDEs without generating any offline training data. We compare the performance of the proposed method against classical numerical methods for extremely large-scale systems.

Benchmark study of melt pool and keyhole dynamics, laser absorptance, and porosity in additive manufacturing of Ti-6Al-4V

Progress in Additive Manufacturing, 1–25 2024

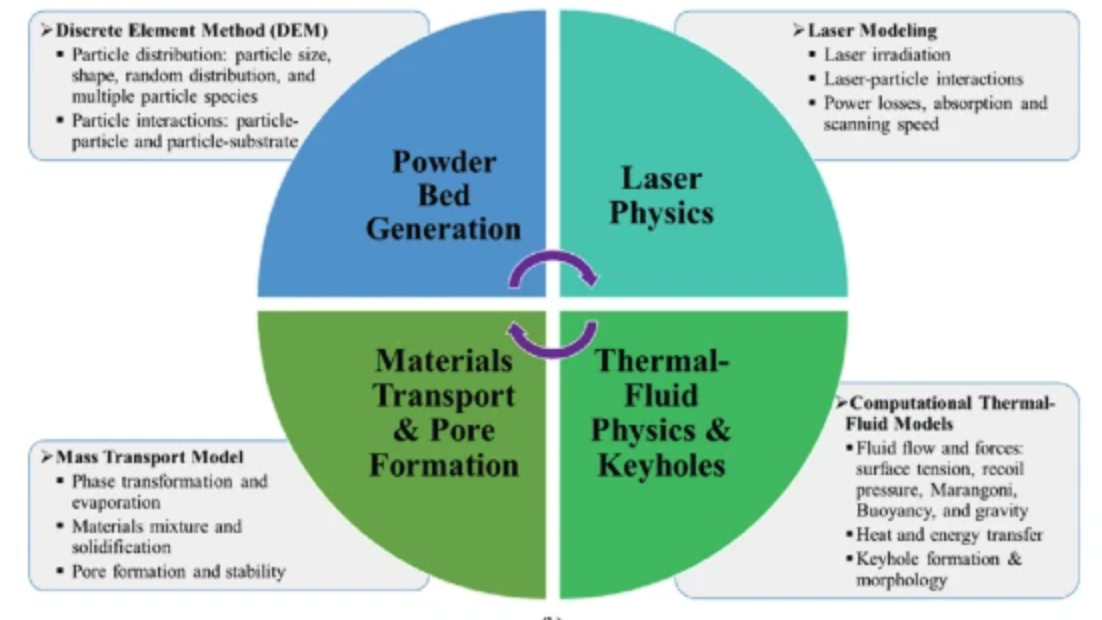

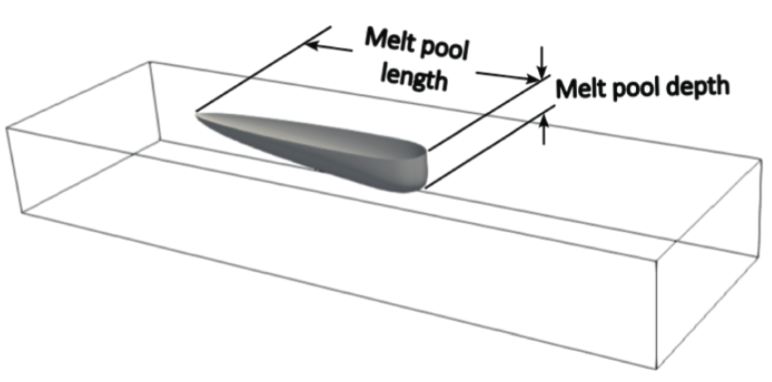

Metal three-dimensional (3D) printing involves a multitude of operational and material parameters with intricate interdependencies, which pose challenges to real-time process optimization, monitoring, and control. The dynamic behavior of the laser-induced melt pool strongly influences the final printed part quality by controlling the absorption of laser power and affecting defect creation, porosity, and surface finish. By leveraging ultrahigh-speed synchrotron X-ray imaging and high-fidelity multiphysics modeling, we identify correlations between laser process parameters, keyhole and melt pool morphologies, laser absorptance, and porosity in metal 3D printing of Ti-6Al-4V, aiding in the development of effective printing strategies. Our models accurately predict the geometries and shapes of melt pools and keyholes, laser absorptance, and the size and shape of keyhole-induced pores during the additive manufacturing process for different laser parameters, in both bare-plate and powder cases. This work establishes robust correlations among process parameters, melt pool and keyhole morphology, and material properties. These findings provide valuable insights into the complex interplay among different design factors in metal 3D printing, laying a strong foundation for the development of highly effective and efficient additive manufacturing processes.

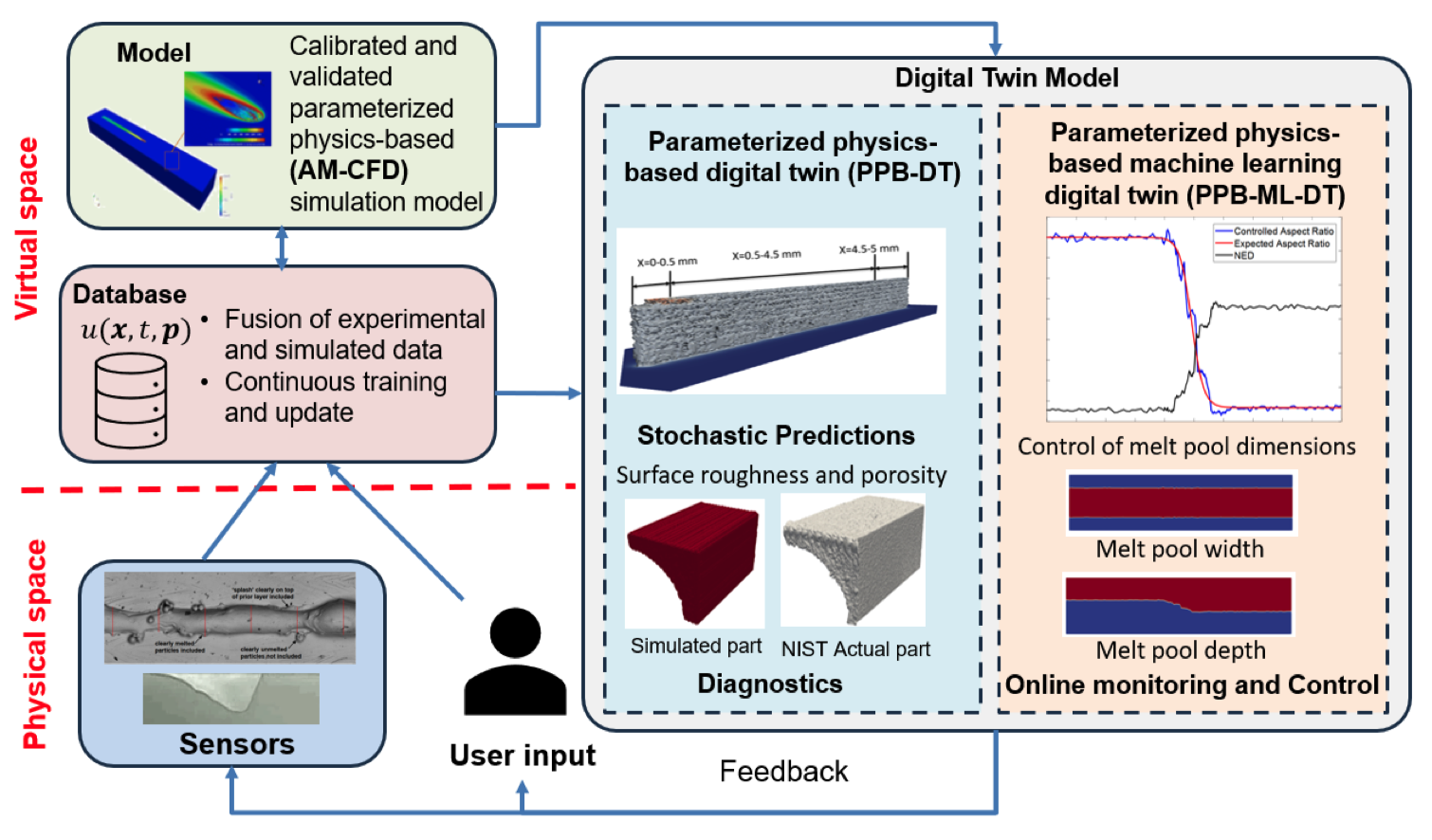

Statistical parameterized physics-based machine learning digital shadow models for laser powder bed fusion process

Additive Manufacturing, 87, 104214 2024

A digital twin (DT) is a virtual representation of a physical process, product, and/or system that relies on a high-fidelity computational model continuously updated by integrating sensor data and user input. In the context of laser powder bed fusion (LPBF) additive manufacturing, a digital twin of the manufacturing process can offer predictions for the produced parts, diagnostics for manufacturing defects, and control capabilities. This paper introduces a parameterized physics-based digital twin (PPB-DT) for statistical predictions of the LPBF metal additive manufacturing process. We accomplish this by creating a high-fidelity computational model that accurately represents melt pool phenomena and then calibrating and validating it through controlled experiments. In PPB-DT, a stochastic calibration process driven by a mechanistic reduced-order method is introduced, which enables statistical predictions of melt pool geometries and the identification of defects such as lack-of-fusion porosity and surface roughness, specifically for diagnostic applications. Leveraging data derived from this physics-based model and from experiments, we trained a machine learning-based digital twin (PPB-ML-DT) model for predicting, monitoring, and controlling melt pool geometries. The proposed digital twin models can be employed for prediction, control, optimization, and quality assurance within the LPBF process, ultimately expediting product development and certification in LPBF-based metal additive manufacturing.

2023

Physics Guided Heat Source for Quantitative Prediction of the Laser Track Measurements of IN718 in 2022 NIST AM Benchmark Test

npj Computational Materials, 10, 37 2023

Challenge 3 of the 2022 NIST additive manufacturing benchmark (AM Bench) experiments asked modelers to submit predictions of solid cooling rate, liquid cooling rate, time above melt, and melt pool geometry for single- and multiple-track laser powder bed fusion processes with moving lasers. An in-house Additive Manufacturing Computational Fluid Dynamics code (AM-CFD) combined with a cylindrical heat source is used to accurately predict these experiments. A heuristic heat source calibration based on the volumetric energy density (ψ) is proposed, using experiments available in the literature. The heat source parameters of the computational model are initially calibrated with a Higher-Order Proper Generalized Decomposition (HOPGD)-based surrogate model. Predictions using the calibrated heat source agree quantitatively with NIST measurements for different process conditions (laser spot diameter, laser power, and scan speed). A scaling law based on keyhole formation is also used to calibrate the parameters of the cylindrical heat source and to predict the challenge experiments. In addition, an improved heat source model is proposed that relates the volumetric energy density (VEDσ) to the melt pool aspect ratio. This model further improves the prediction of the experimental melt pool measurements, including cases at higher VEDσ. Overall, we conclude that an appropriate choice of laser heat source parameterization scheme, together with the heat source model, is crucial for accurately predicting melt pool geometry and thermal measurements while bypassing expensive computational simulations that incorporate additional physics.

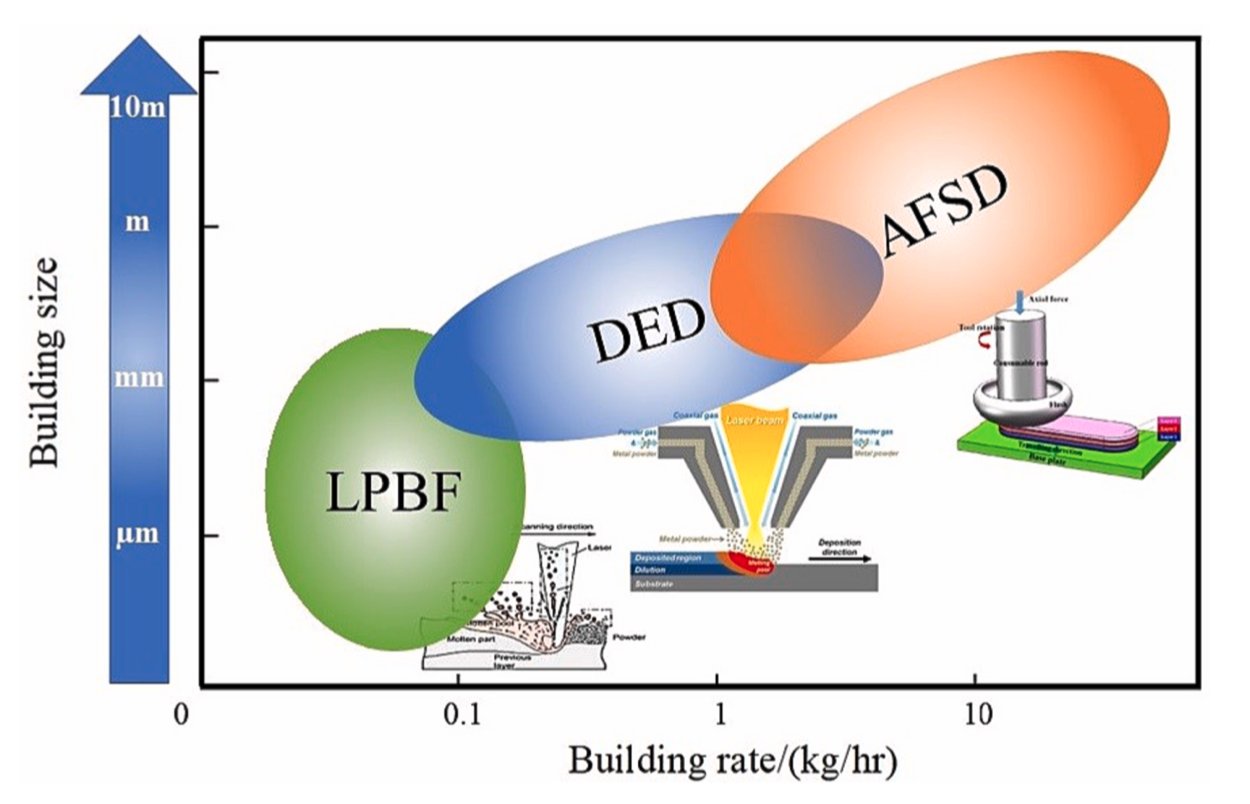

Additive friction stir deposition of metallic materials: process, structure and properties

Materials & Design, 234, 112356 2023

Additive friction stir deposition (AFSD) is a relatively new additive manufacturing technique that fabricates parts in the solid state, below the melting temperature. Owing to its solid-state nature, AFSD benefits from lower residual stresses as well as significantly lower susceptibility to porosity, hot cracking, and other defects compared with conventional fusion-based metallic additive manufacturing. These unique features make AFSD a promising alternative to conventional forging for fabricating large structures for aerospace, naval, nuclear, and automotive applications. This review comprehensively summarizes the advances in AFSD, as well as the microstructure and properties of the final parts. Given the rapidly growing research in AFSD, we focus on fundamental questions and issues, with particular emphasis on the underlying relationships among AFSD processing, microstructure, and mechanical properties. The implications of experimental and modeling research in AFSD are discussed in detail. Unlike the columnar structure typical of fusion-based additive manufacturing, AFSD can produce fully dense material with a fine, fully equiaxed microstructure. This as-wrought structure gives as-printed parts properties comparable to those of wrought parts. The fundamental differences between AFSD and fusion-based metallic additive manufacturing are summarized. Finally, existing challenges and possible future research directions are explored.

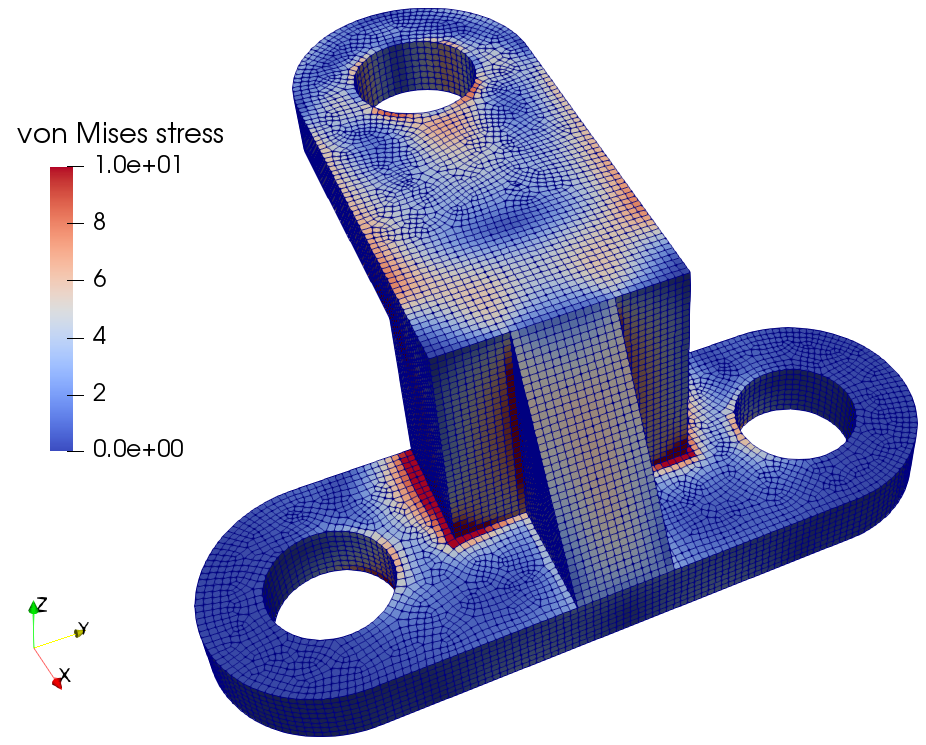

JAX-FEM: A differentiable GPU-accelerated 3D finite element solver for automatic inverse design and mechanistic data science

Computer Physics Communications, 108802 2023

This paper introduces JAX-FEM, an open-source differentiable finite element method (FEM) library. Built on top of Google JAX, a rising machine learning library focused on high-performance numerical computing, JAX-FEM is implemented in pure Python yet scales efficiently to moderate and large problems. For example, in a 3D tensile loading problem with 7.7 million degrees of freedom, JAX-FEM on a GPU achieves roughly a 10× speedup over a commercial FEM code, depending on the platform. Beyond efficiently solving forward problems, JAX-FEM uses automatic differentiation so that inverse problems are solved in a fully automatic manner, without manually deriving sensitivities. Examples of 3D topology optimization of nonlinear materials are shown to achieve optimal compliance. Finally, JAX-FEM is an integrated platform for machine learning-aided computational mechanics. We show an example of data-driven multiscale computations of a composite material, where JAX-FEM provides an all-in-one solution from microscopic data generation and model training to macroscopic FE computations. The source code of the library and these examples are shared with the community to facilitate computational mechanics research.

2022

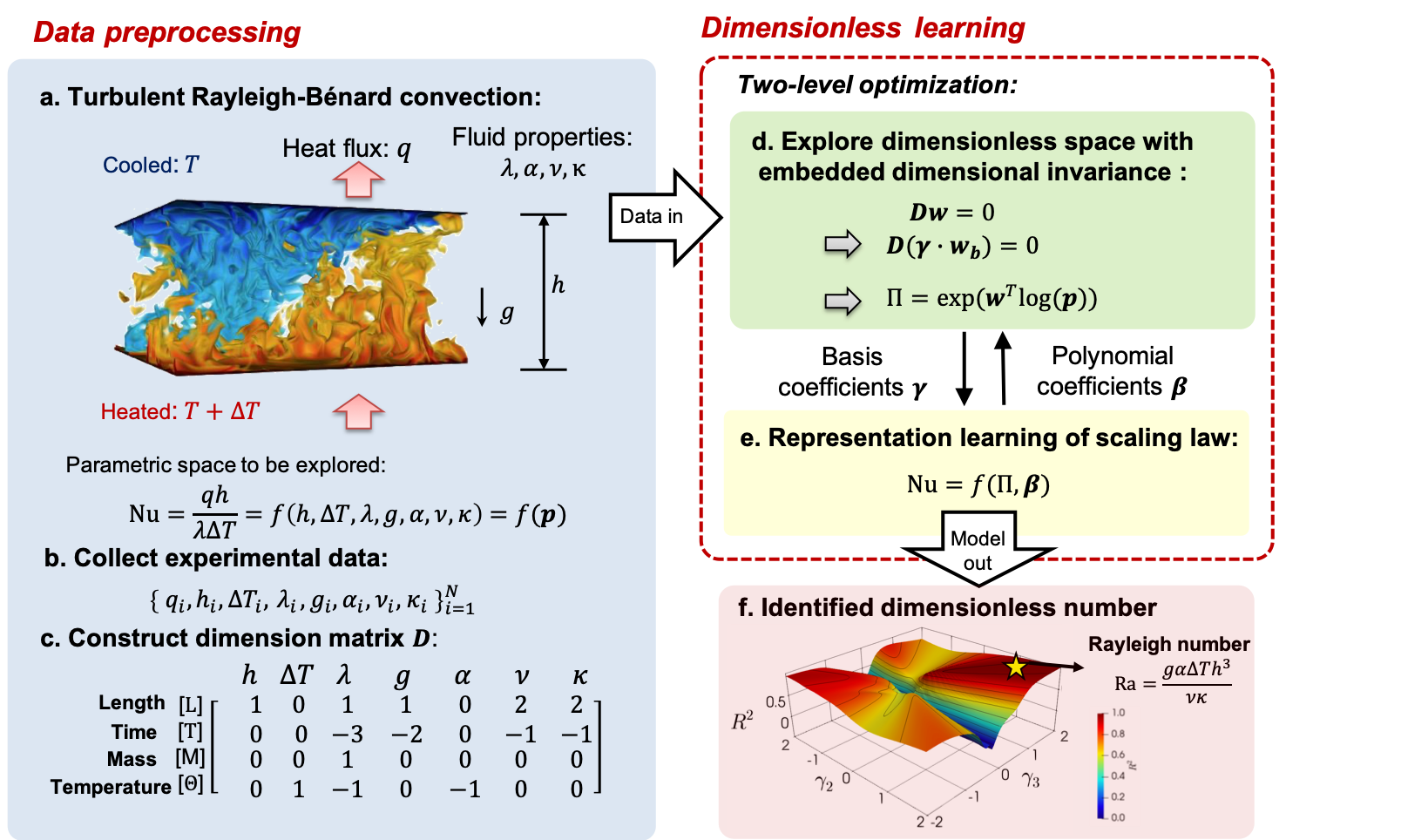

Data-driven discovery of dimensionless numbers and governing laws from scarce measurements

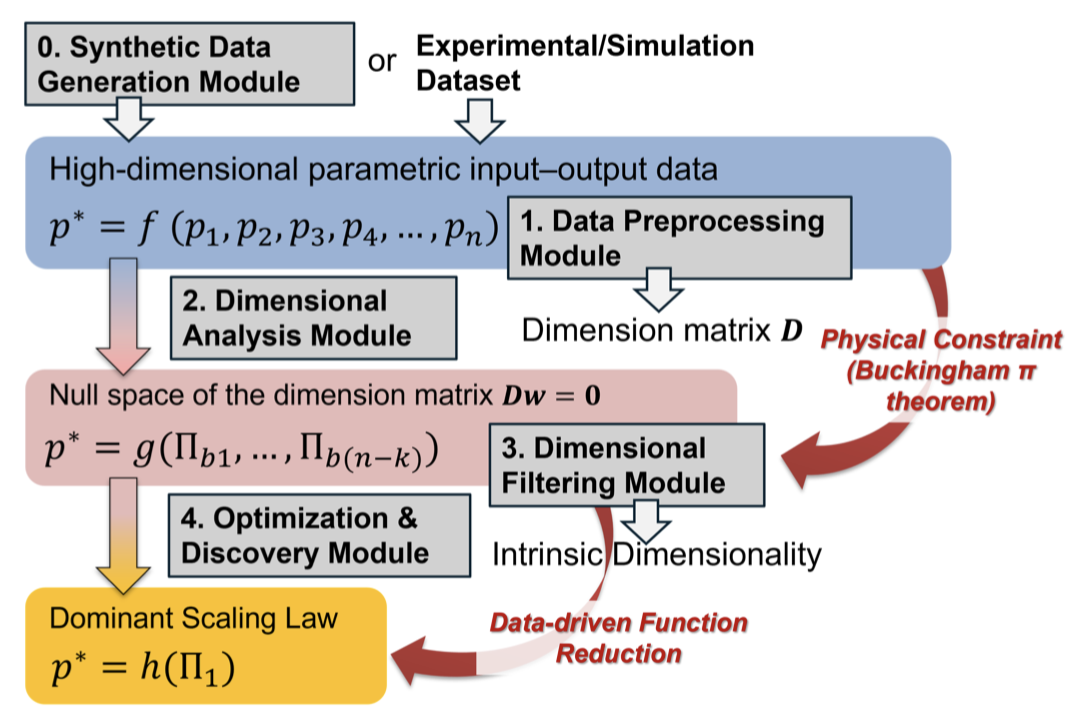

Nature Communications, 13, 7562 2022

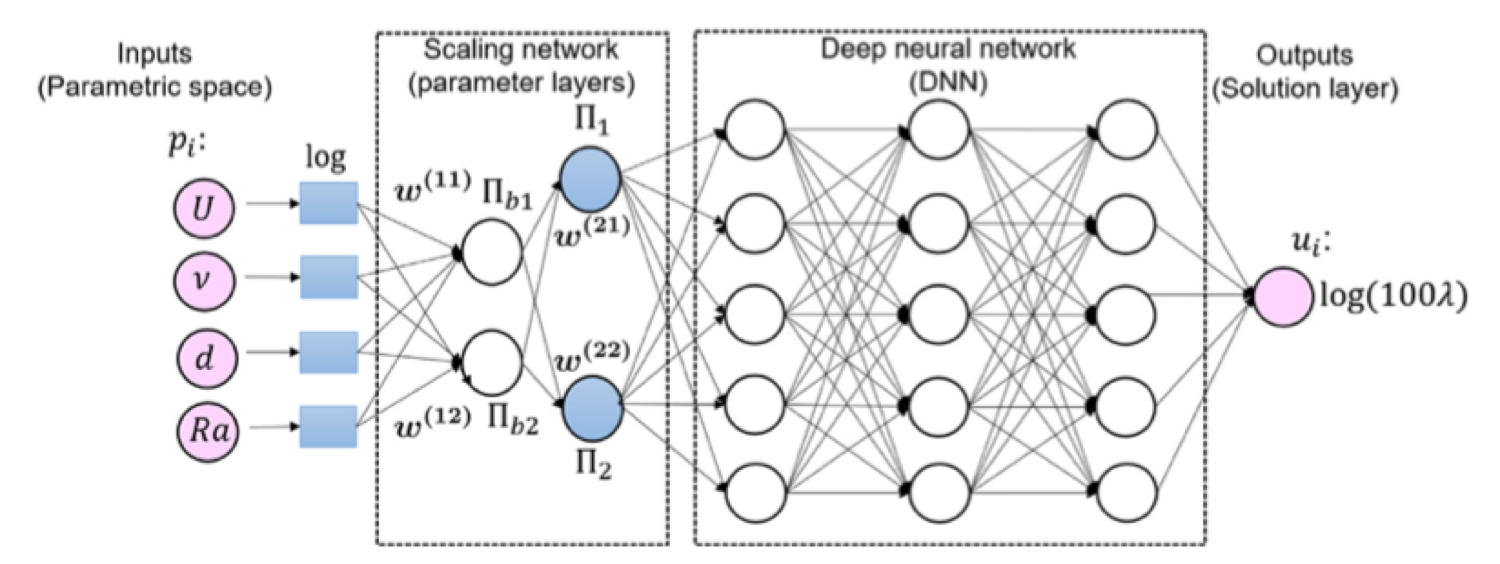

Dimensionless numbers and scaling laws provide elegant insights into the characteristic properties of physical systems. Classical dimensional analysis and similitude theory fail to identify a set of unique dimensionless numbers for a highly multi-variable system with incomplete governing equations. This paper introduces a mechanistic data-driven approach that embeds the principle of dimensional invariance into a two-level machine learning scheme to automatically discover dominant dimensionless numbers and governing laws (including scaling laws and differential equations) from scarce measurement data. The proposed methodology, called dimensionless learning, is a physics-based dimension reduction technique. It can reduce high-dimensional parameter spaces to descriptions involving only a few physically interpretable dimensionless parameters, greatly simplifying complex process design and system optimization. We demonstrate the algorithm by solving several challenging engineering problems with noisy experimental measurements (not synthetic data) collected from the literature. Examples include turbulent Rayleigh-Bénard convection, vapor depression dynamics in laser melting of metals, and porosity formation in 3D printing. Lastly, we show that the proposed approach can identify dimensionally homogeneous differential equations with dimensionless number(s) by leveraging sparsity-promoting techniques.
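The dimensional-invariance principle mentioned above can be illustrated, in a much simplified form, by the classic Buckingham Π step: the exponents w that make Π = ∏ q_i^{w_i} dimensionless satisfy D w = 0, where D is the dimension matrix whose columns list each variable's exponents of mass, length, and time. The sketch below is only that linear-algebra step, not the paper's two-level learning scheme; the choice of variables (density, velocity, length, viscosity) and the helper `null_space` are assumptions for illustration.

```python
import numpy as np

# Columns: density rho, velocity V, length L, dynamic viscosity mu
# Rows: exponents of mass M, length L, time T in each quantity's units
D = np.array([
    [ 1,  0, 0,  1],   # M
    [-3,  1, 1, -1],   # L
    [ 0, -1, 0, -1],   # T
], dtype=float)

def null_space(A, tol=1e-10):
    """Basis of the null space of A, taken from the SVD (rows of Vt beyond the rank)."""
    _, s, vt = np.linalg.svd(A)
    rank = int(np.sum(s > tol))
    return vt[rank:].T

w = null_space(D)[:, 0]
w = w / w[0]          # scale so the density exponent is 1
print(np.round(w, 6)) # approximately [1, 1, 1, -1], i.e. Re = rho * V * L / mu
```

Here the null space is one-dimensional, so the Reynolds number is the unique dimensionless group (up to a power). For richer systems the null space has several basis vectors and many equivalent Π groups exist; as the abstract notes, classical analysis cannot pick a unique set, which is the gap the data-driven approach targets.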

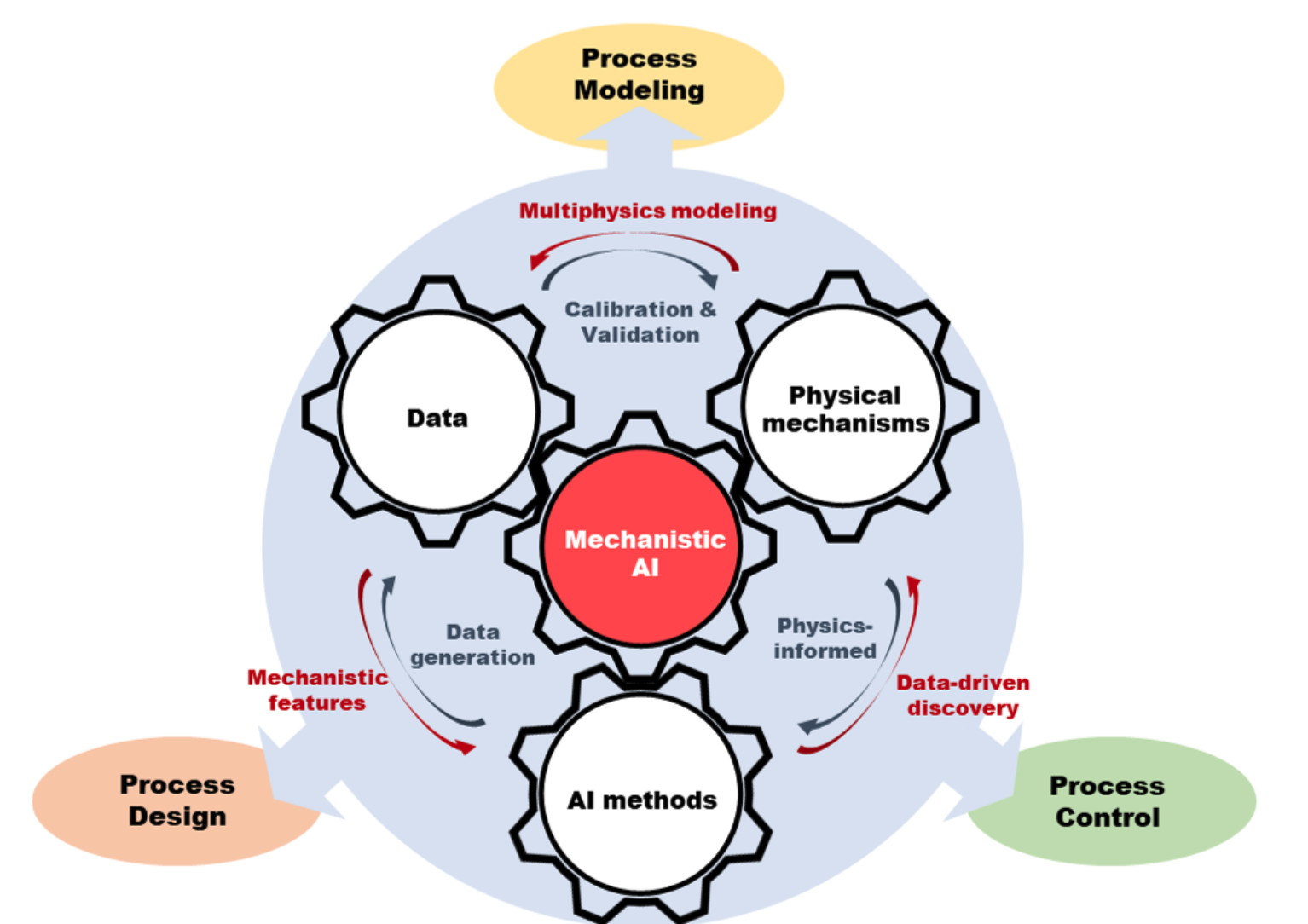

Mechanistic artificial intelligence (Mechanistic-AI) for modeling, design, and control of advanced manufacturing processes: Current state and perspectives

Journal of Materials Processing Technology, 117485 2022

Today's manufacturing processes are pushed to their limits to generate products of ever-increasing quality at low cost. A prominent hurdle on this path arises from the multiscale, multiphysics, dynamic, and stochastic nature of many manufacturing systems, which has motivated many innovations at the intersection of artificial intelligence (AI), data analytics, and manufacturing sciences. This study reviews recent advances in Mechanistic-AI, defined as a methodology that combines the raw mathematical power of AI methods with mechanism-driven principles and engineering insights. Mechanistic-AI solutions are systematically analyzed for three aspects of manufacturing processes, i.e., modeling, design, and control, with a focus on approaches that can reduce data requirements and improve generalizability, explainability, and the capability to handle challenging and heterogeneous manufacturing data. Additionally, we introduce a corpus of cutting-edge Mechanistic-AI methods that have shown great promise in other scientific fields but have yet to be applied in manufacturing. Finally, gaps in knowledge and under-explored research directions are identified, such as the lack of manufacturing constraints in AI methods, the lack of uncertainty analysis, and limited reproducibility and established benchmarks. This paper shows the immense potential of Mechanistic-AI to address new problems in manufacturing systems and is expected to drive further advancements in manufacturing and related fields.

2021

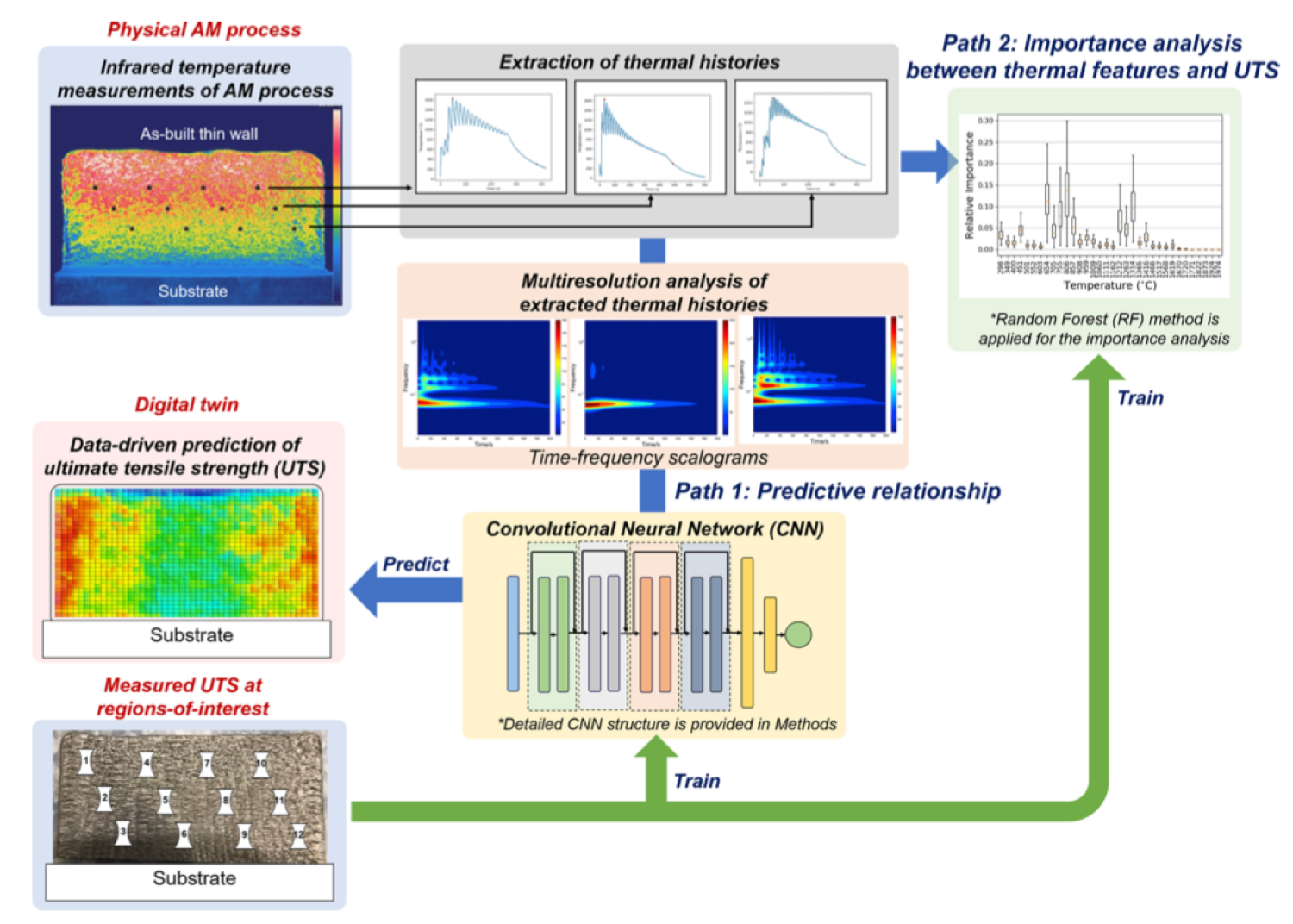

Mechanistic data-driven prediction of as-built mechanical properties in metal additive manufacturing

npj Computational Materials, 7(1), 1–12 2021

The digitized format of microstructures, or digital microstructures, plays a crucial role in modern materials research. Unfortunately, experimental acquisition of digital microstructures can fail to deliver the resolution necessary to capture all relevant geometric features of the microstructures. Resolution-sensitive microstructural features overlooked due to insufficient resolution may limit one's ability to conduct a thorough microstructure characterization and material behavior analysis, such as mechanical analysis based on numerical modeling. Here, a highly efficient deep learning-based super-resolution imaging approach is developed that uses a deep super-resolution residual network to super-resolve low-resolution (LR) microstructure data for microstructure characterization and finite element (FE) mechanical analysis. Microstructure characterization and FE-based mechanical analysis using the super-resolved microstructure data not only proved to be as accurate as those based on high-resolution (HR) data but also provided insights into local microstructural features, such as grain boundary normals and local stress distributions, which can be only partially considered or entirely disregarded in LR data-based analysis.

Hierarchical Deep Learning Neural Network (HiDeNN): An artificial intelligence (AI) framework for computational science and engineering

Computer Methods in Applied Mechanics and Engineering, 373, 113452 2021

In this work, a unified AI framework named Hierarchical Deep Learning Neural Network (HiDeNN) is proposed to solve challenging computational science and engineering problems with little or no available physics as well as with extreme computational demand. The detailed construction and mathematical elements of HiDeNN are introduced and discussed to show the flexibility of the framework for diverse problems from disparate fields. Three example problems are solved to demonstrate the accuracy, efficiency, and versatility of the framework. The first example shows that HiDeNN can achieve better accuracy than the conventional finite element method by learning the optimal nodal positions and capturing the stress concentration with a coarse mesh. The second example applies HiDeNN to multiscale analysis with sub-neural networks at each material point of the macroscale. The final example demonstrates how HiDeNN can discover governing dimensionless parameters from experimental data so that a reduced set of inputs can be used to increase learning efficiency. We further discuss and demonstrate solutions for advanced engineering problems that require state-of-the-art AI approaches, and how a general and flexible system such as the HiDeNN-AI framework can be applied to solve them.
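One way to see how finite element interpolation can live inside a neural network, and why nodal positions become learnable, is that a 1D linear hat shape function can be written exactly as a weighted sum of three ReLU units whose biases are the neighboring nodal coordinates. The sketch below assumes that construction for illustration; the function names are hypothetical and this is not the HiDeNN code.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def hat(x, x_left, x_mid, x_right):
    """Linear FE shape function of node x_mid, built from three ReLU units.

    Rises linearly from 0 at x_left to 1 at x_mid, falls to 0 at x_right,
    and is exactly zero outside [x_left, x_right].
    """
    h1 = x_mid - x_left
    h2 = x_right - x_mid
    return (relu(x - x_left) / h1
            - relu(x - x_mid) * (1.0 / h1 + 1.0 / h2)
            + relu(x - x_right) / h2)

x = np.array([-1.0, 0.0, 0.25, 0.5, 1.0, 1.5, 2.0])
print(hat(x, 0.0, 0.5, 1.5))  # [0, 0, 0.5, 1, 0.5, 0, 0]
```

Because the nodal coordinates enter only as ReLU biases and weights, a gradient-based optimizer can adjust them along with the nodal values, which is one plausible reading of "learning the optimal nodal positions" in the first example.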

2019

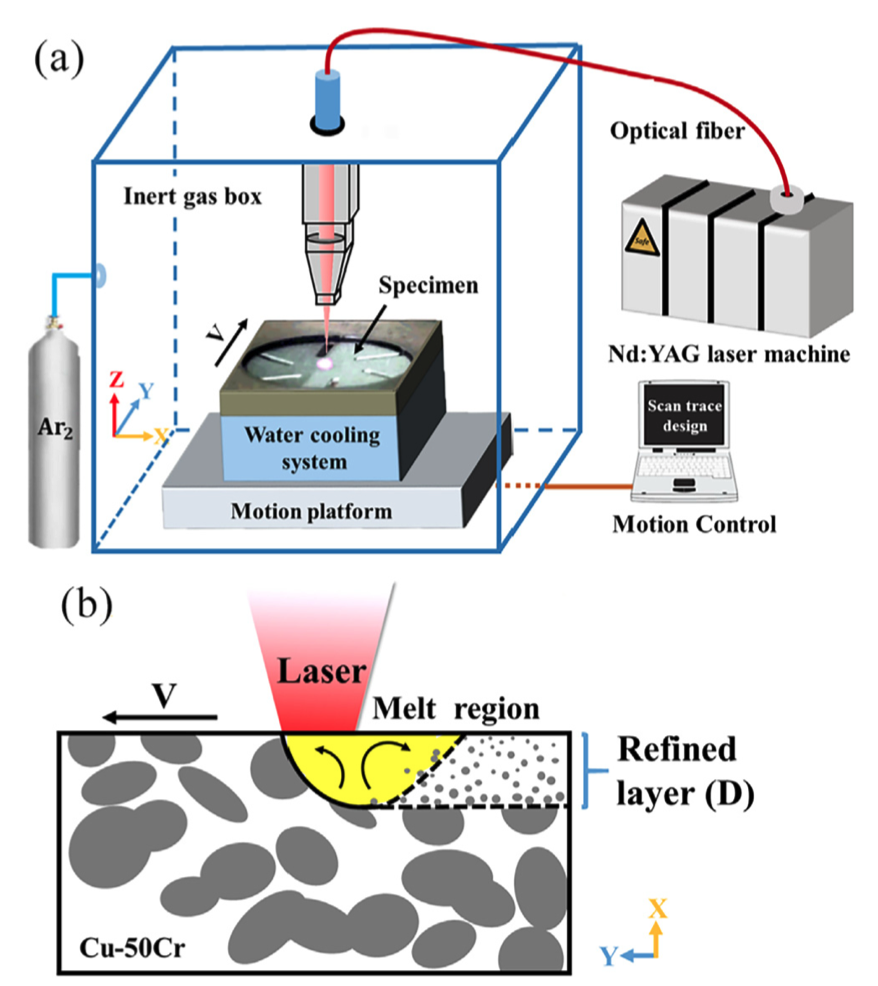

The effect of laser surface melting on grain refinement of phase separated Cu-Cr alloy

Optics & Laser Technology, 119, 105577 2019

Grain refinement and homogenization of the Cr phase were achieved by laser surface melting (LSM), significantly improving the properties of the Cu-Cr alloy. In this study, LSM of a Cu-50Cr alloy (wt.%) was conducted with a high-power-density (10⁶–10⁷ W/cm²) laser beam, and the microstructure and properties of the melt layer were investigated. The Cr phase was effectively refined from hundreds of microns to several microns, and the average size of the Cr particles decreased to a few hundred nanometers. The high cooling rate effectively inhibited coarsening of the Cr particles during liquid phase separation. Spherical Cr particles were dispersed in the melt layer, which had a thickness of 165 ± 20 μm. Microhardness was markedly enhanced, with a maximum of 232 HV, 2.8 times that of the substrate. The arc duration of the LSM-treated contacts increased by up to 18%. The withstanding voltage of the fixed and moving contacts increased by 28.7% and 35.4%, respectively. The results show that LSM is an effective method to refine the microstructure of Cu-Cr alloy and a promising modification method for electrical Cu-Cr vacuum contacts.

Talks

Data-driven discovery of dimensionless numbers and governing laws from scarce measurements

Scientific Machine Learning Webinar, Carnegie Mellon University

March 2023

Dimensionless learning for discovering new dimensionless numbers

16th U.S. National Congress on Computational Mechanics (USNCCM16), Virtual Event

July 25–29, 2021

Mechanistic digital twin of metal additive manufacturing

Mechanistic Machine Learning and Digital Twins for Computational Science, Engineering & Technology (MMLDT-CSET 2021), San Diego, USA

September 2021